Thesis Topic

Problem Statement

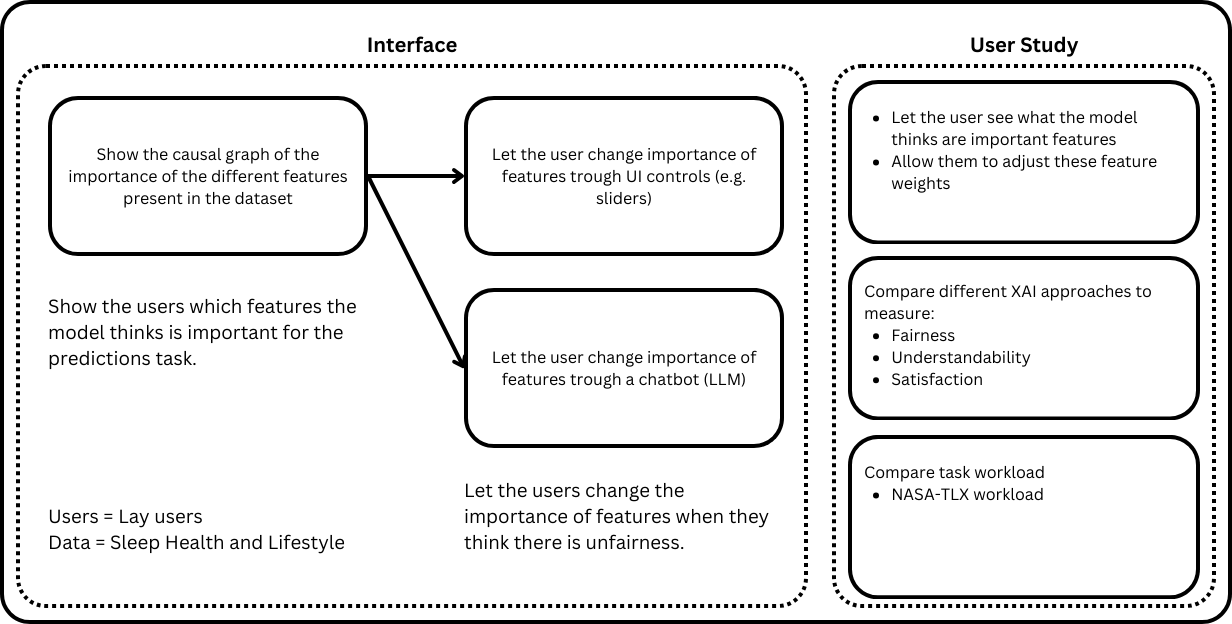

How can we make AI models fairer? A model trained on a dataset also absorbs the biases of that dataset, which can lead to unfair predictions. Interpreting feature importance usually requires domain knowledge, and adjusting a model usually requires AI expertise. How can we build a UI that lets lay users see which features the model considers important and adjust their importance easily and intuitively?

Research Objectives, Questions and Hypothesis

Make an intuitive UI so that lay users can easily notice bias issues. Let the user adjust feature importance and compare features to gain insight into how specific features are correlated and to discover bias issues. There will be two UIs: one where the user adjusts feature importance through a chatbot (LLM) and one where the user adjusts it through UI controls (e.g. sliders).

- Design an IML (interactive machine learning) system that lets lay users assess the bias of ML models.

- Design model steering methods to allow lay users to adjust the feature importance and compare features to get more insight into how specific features are correlated and discover and reduce bias issues.

- Explore the impact of LLM-based chatbots for conversational explanations for understanding and reducing bias issues of ML models.
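To make the model steering objective concrete, here is a minimal sketch of what adjusting feature importance could mean for a simple linear model. It is an illustration under stated assumptions, not the planned implementation: the feature names, weights, and rows are made up, features are assumed to be scaled to [0, 1] so that absolute weights are comparable, and `predict_proba`, `relative_importance`, and `group_gap` are hypothetical helper names. A slider or a chatbot instruction would both end up setting the same `steering` multipliers.

```python
from math import exp

# Hypothetical weights of an already-trained logistic model (not from the thesis).
WEIGHTS = {"gender": 2.0, "tumor_stage": 1.0, "age": 1.0}
BIAS = -1.5


def predict_proba(row, steering=None):
    """Return the predicted probability for one row.

    `steering` maps a feature name to a multiplier (1.0 = unchanged,
    0.0 = ignored); this is the value a slider or chatbot would set.
    """
    steering = steering or {}
    z = BIAS + sum(
        w * steering.get(name, 1.0) * row[name] for name, w in WEIGHTS.items()
    )
    return 1.0 / (1.0 + exp(-z))


def relative_importance(steering=None):
    """Share of the total absolute weight held by each feature after steering."""
    steering = steering or {}
    magnitude = {
        name: abs(w * steering.get(name, 1.0)) for name, w in WEIGHTS.items()
    }
    total = sum(magnitude.values())
    if total == 0:
        return {name: 0.0 for name in magnitude}
    return {name: m / total for name, m in magnitude.items()}


def group_gap(rows, feature, steering=None):
    """Mean predicted probability for feature == 1 minus that for feature == 0."""
    def mean_for(value):
        scores = [predict_proba(r, steering) for r in rows if r[feature] == value]
        return sum(scores) / len(scores)

    return mean_for(1) - mean_for(0)


# Made-up rows; within each pair the two rows differ only in `gender`.
ROWS = [
    {"gender": 1, "tumor_stage": 0.5, "age": 0.4},
    {"gender": 0, "tumor_stage": 0.5, "age": 0.4},
    {"gender": 1, "tumor_stage": 1.0, "age": 0.7},
    {"gender": 0, "tumor_stage": 1.0, "age": 0.7},
]

baseline_gap = group_gap(ROWS, "gender")
steered_gap = group_gap(ROWS, "gender", steering={"gender": 0.0})
print("importance before:", relative_importance())
print("importance after: ", relative_importance({"gender": 0.0}))
print("gap before:", baseline_gap, "gap after:", steered_gap)
```

In this toy setup the gap between the two groups disappears once the `gender` multiplier is set to zero, because the rows are otherwise identical. On real data, other features can act as proxies for the down-weighted one, so a gap may remain; comparing features, as described in the objectives, is meant to help users spot such cases.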

Research Questions and Hypothesis

RQ1: How do conversational explanations impact the understandability of bias issues of ML models? Hypothesis: Users can interpret the model’s bias more clearly through conversational explanations.

RQ2: How do LLM chatbots facilitate users in model steering to reduce bias issues of ML models? Hypothesis: LLM chatbot-based model steering is better than manual model steering approaches for reducing bias issues.

RQ3: Do conversational explanations provide better causal reasoning of model bias than visual explanations? Hypothesis: Conversational explanations provide better causal reasoning of model bias than visual explanations.

Dataset Description

This is a dataset about Differentiated Thyroid Cancer Recurrence.

Research Design

Between-subjects study:

Group 1: Visual explanations + Manual Model Steering (without LLM-based chatbot for explanations and steering)

Group 2: Visual + conversational explanations + (Manual + Conversational) Model Steering (with LLM-based chatbots)

The type of study depends on the number of participants: < 10 per group -> qualitative study, ~15-20 per group -> mixed-methods study