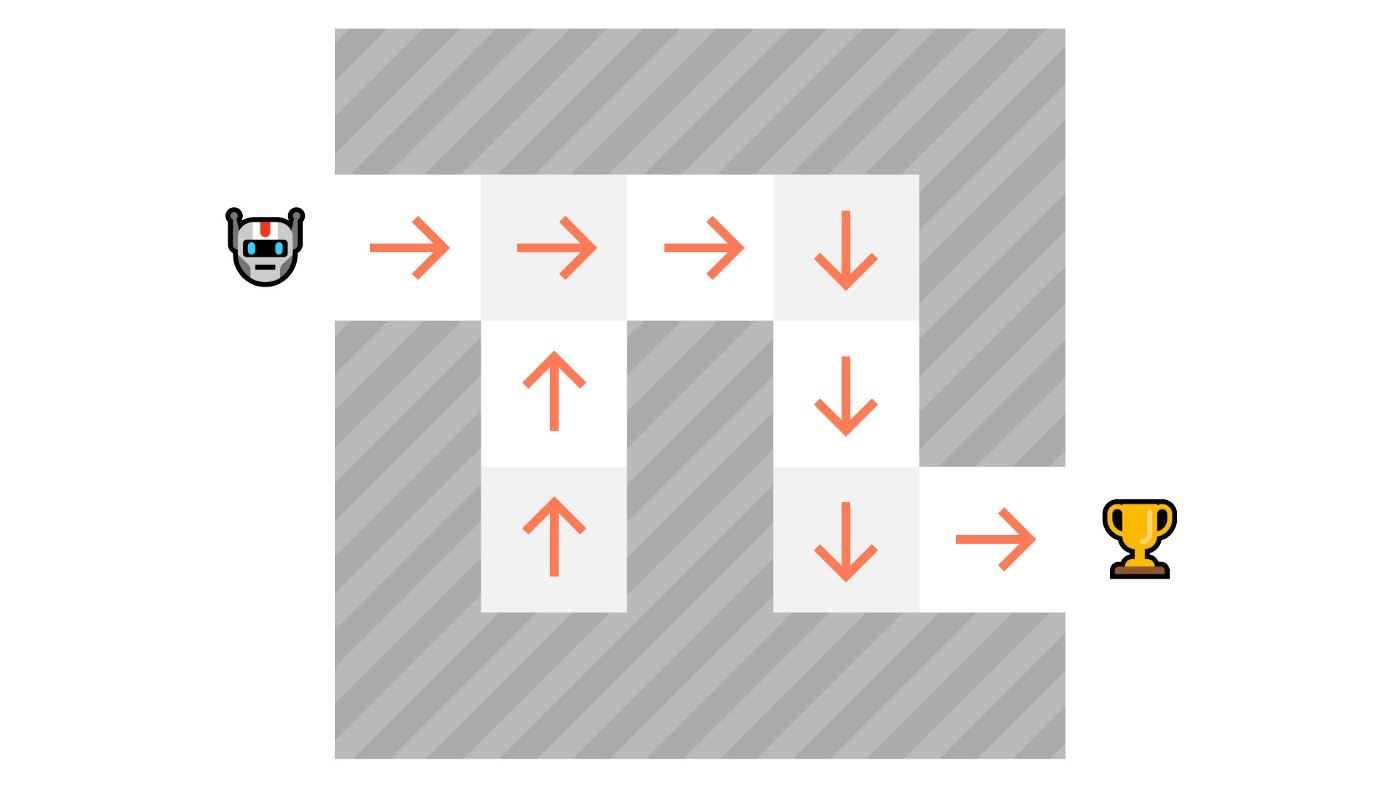

In Policy-Based methods, we learn a policy function directly.

This function will define a mapping between each state and the best corresponding action. We can also say that it’ll define a probability distribution over the set of possible actions at that state.

We have two types of policies:

- Deterministic: a policy at a given state will always return the same action. \(a = \pi(s)\)

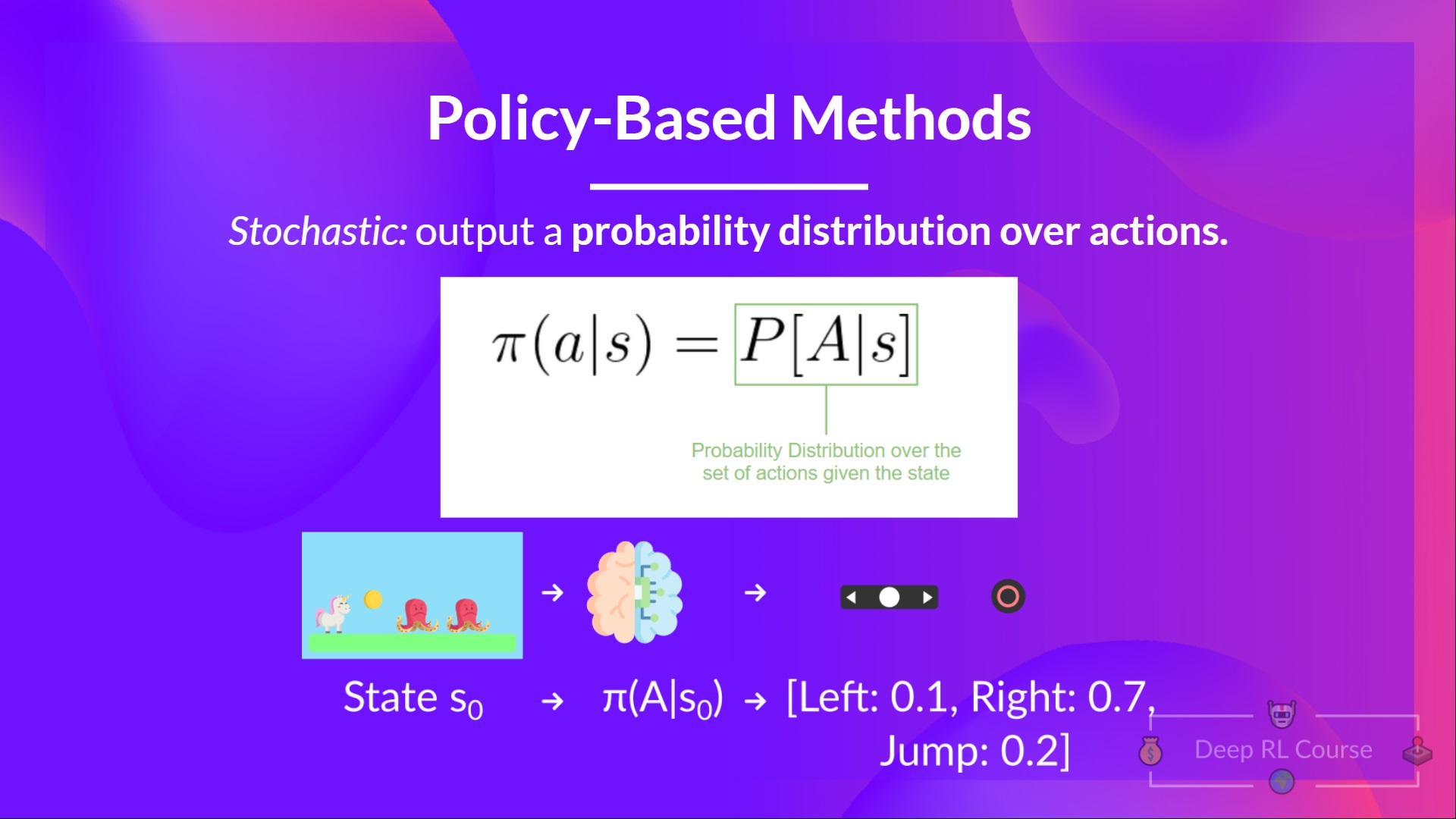

-

Stochastic: outputs a probability distribution over actions. (policy(actions | state) = probability distribution over the set of actions given the current state) \(\pi(a|s) = P[A|s]\) Another idea is to parameterize the policy. For instance, using a neural network \(\pi _\theta\), this policy will output a probability distribution over actions (stochastic policy). Our objective then is to maximize the performance of the parameterized policy using gradient ascent. To do that, we control the parameter \(\theta\) that will affect the distribution of actions over a state. These methods are called Policy-gradient methods.